3. Distributed deployment example

Deploying FRED on multiple servers brings at least two advantages:

increased performance,

access control on the network level.

Deploying on multiple physical servers is not the only distributed solution; deploying on virtual servers or separating tasks at the process level is also possible.

Nodes overview

Nodes in this document represent execution environments.

We work with the following nodes:

EPP node – EPP service

ADMIN node – web admin service

WEB node – public web services: Unix WHOIS, Web WHOIS, RDAP

HM node – zone management

APP node – application servers, CLI admin tools, pgbouncer, CORBA naming service

DB node – the main FRED database

LOGDB node – the logger database
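Nodes are execution environments rather than physical machines. One host can therefore run several nodes, and one node can span several hosts. The following Python sketch records the nodes listed above and checks that a proposed layout places every node on at least one host. The layout format and host names are hypothetical.

```python
# Node names and roles as listed in the nodes overview.
NODES: dict[str, list[str]] = {
    "EPP": ["EPP service"],
    "ADMIN": ["web admin service"],
    "WEB": ["Unix WHOIS", "Web WHOIS", "RDAP"],
    "HM": ["zone management"],
    "APP": ["application servers", "CLI admin tools", "pgbouncer", "CORBA naming service"],
    "DB": ["main FRED database"],
    "LOGDB": ["logger database"],
}


def nodes_to_hosts(layout: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert a host -> nodes layout into node -> hosts.

    Raises ValueError if the layout names an unknown node or leaves
    any node without a host.
    """
    placement: dict[str, list[str]] = {node: [] for node in NODES}
    for host, nodes in layout.items():
        for node in nodes:
            if node not in placement:
                raise ValueError(f"unknown node {node!r} on host {host!r}")
            placement[node].append(host)
    missing = [node for node, hosts in placement.items() if not hosts]
    if missing:
        raise ValueError("nodes without a host: " + ", ".join(missing))
    return placement


if __name__ == "__main__":
    # Hypothetical three-host layout: public-facing nodes kept apart from the data.
    layout = {
        "front-1": ["EPP", "WEB"],
        "admin-1": ["ADMIN", "HM"],
        "back-1": ["APP", "DB", "LOGDB"],
    }
    for node, hosts in nodes_to_hosts(layout).items():
        print(f"{node}: {', '.join(hosts)}")
```

The same check covers the virtual-server and process-level variants, because it only cares which environment runs which node.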

Tip

Redundancy

This text does not describe redundancy options in detail, but here is a quick tip:

database replication is a standard technique to protect data,

the whole system can be replicated in several instances at different locations, which can substitute for one another when one instance fails or during a system upgrade.

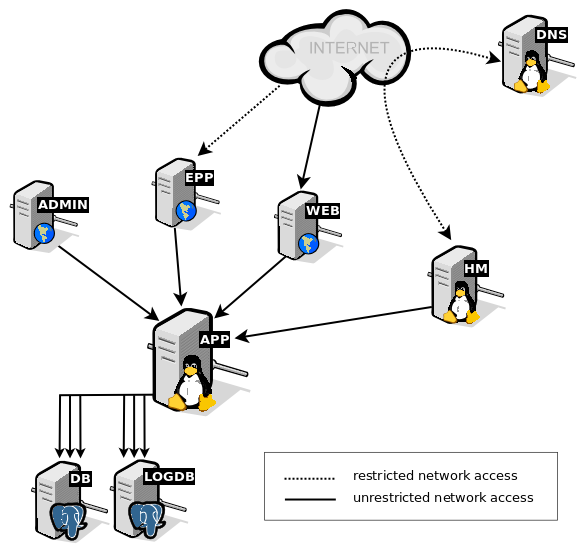

3.1. Network

Network rules are described per node in the following sections; here is an overview of the logical connections in the network (for a single instance of the system).

Network – Logical topology

Restricted network access means that servers can be accessed only from the IP addresses and on the ports allowed by the firewall.

Unrestricted network access means that servers can be accessed from any IP address, but only the necessary ports should be open, as listed in the network rules for each node.
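Expressed as data, the two access modes differ only in whether a rule lists allowed sources. The Python sketch below renders such rules as firewall commands. It makes three assumptions:

- It assumes plain iptables on IPv4; IPv6 would need equivalent ip6tables rules.
- All address ranges are placeholders, not real values.
- It does not include management access such as SSH, which would have to be allowed before the DROP policy is applied.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AccessRule:
    """One allowed inbound TCP port; sources=None means unrestricted access."""
    port: int
    sources: Optional[tuple[str, ...]] = None


def iptables_rules(rules: list[AccessRule]) -> list[str]:
    """Render iptables (IPv4) commands: accept the listed traffic, drop the rest."""
    lines = [
        # Keep loopback traffic and replies to outgoing connections working.
        "iptables -A INPUT -i lo -j ACCEPT",
        "iptables -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT",
    ]
    for rule in rules:
        base = f"iptables -A INPUT -p tcp --dport {rule.port}"
        if rule.sources is None:
            # Unrestricted: any source address, but only this port.
            lines.append(f"{base} -j ACCEPT")
        else:
            # Restricted: one ACCEPT per allowed address or range.
            lines.extend(f"{base} -s {src} -j ACCEPT" for src in rule.sources)
    # Everything not accepted above is dropped.
    lines.append("iptables -P INPUT DROP")
    return lines


# Per-node rules from this chapter; all address ranges are placeholders.
NODE_RULES = {
    "EPP": [AccessRule(700, ("192.0.2.0/24",))],   # registrar-declared ranges
    "ADMIN": [AccessRule(443, ("10.0.0.0/16",))],  # Registry private network
    "WEB": [AccessRule(443), AccessRule(43)],      # HTTPS and WHOIS for anyone
    "HM": [AccessRule(53, ("198.51.100.53",))],    # DNS servers only (IXFR)
}

if __name__ == "__main__":
    print("\n".join(iptables_rules(NODE_RULES["WEB"])))
```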

3.2. EPP node

Provides: EPP service

Packages:

libapache2-mod-corba

libapache2-mod-eppd

Network:

access to EPP (tcp, port 700) permitted only from particular IP addresses (or ranges) declared by registrars
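Registrars may declare overlapping or adjacent ranges. The Python sketch below uses only the standard ipaddress module. It merges the declared ranges into a minimal list of networks, for example to feed firewall rules, and checks a source address against that list. The registrar handles and addresses are hypothetical.

```python
import ipaddress
from typing import Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def collapse_ranges(declared: dict[str, list[str]]) -> list[Network]:
    """Merge all registrar-declared addresses and ranges into minimal networks."""
    v4: list[ipaddress.IPv4Network] = []
    v6: list[ipaddress.IPv6Network] = []
    for ranges in declared.values():
        for text in ranges:
            # strict=False tolerates host bits, e.g. "192.0.2.10/24".
            net = ipaddress.ip_network(text, strict=False)
            (v4 if net.version == 4 else v6).append(net)
    # collapse_addresses() cannot mix IPv4 and IPv6, so merge each family separately.
    return [*ipaddress.collapse_addresses(v4), *ipaddress.collapse_addresses(v6)]


def epp_access_allowed(source: str, allowed: list[Network]) -> bool:
    """True if the source address falls into one of the allowed networks."""
    addr = ipaddress.ip_address(source)
    return any(addr in net for net in allowed)


if __name__ == "__main__":
    declared = {
        "REG-A": ["192.0.2.0/25", "192.0.2.128/25"],
        "REG-B": ["198.51.100.7", "2001:db8::/48"],
    }
    allowed = collapse_ranges(declared)
    print([str(net) for net in allowed])
    print(epp_access_allowed("192.0.2.200", allowed))
```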

| Package | Provided services | Description | Default config location |
|---|---|---|---|
| libapache2-mod-corba | None (it is only an Apache module) | Apache module that provides common functionality of CORBA communication for the EPP and WHOIS Apache modules |  |
| libapache2-mod-eppd | None (it is only an Apache module) | Apache module for parsing EPP commands and transforming them into CORBA calls to the server (and vice versa) |  |

3.3. ADMIN node

Provides: Web administration interface

Network:

access to HTTPS (tcp, port 443) permitted only from the private network of the Registry

| Docker image | Description | Default config location |
|---|---|---|
|  | HTTP web server | None; configuration example in fred/ferda/-/tree/master/docs/demo-deploy |
|  | Web server gateway interface | None; configuration example in fred/ferda/-/tree/master/docs/demo-deploy |

3.4. WEB node

Provides: Unix WHOIS, Web WHOIS, RDAP

Network:

access to HTTPS (tcp, port 443) permitted from anyone

access to WHOIS (tcp, port 43) permitted from anyone
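Port 43 serves the plain-text WHOIS protocol (RFC 3912): the client sends one query line terminated by CRLF, and the server sends its reply and closes the connection. Below is a minimal Python client sketch for checking the WEB node from outside. The host name in the usage line is hypothetical, and decoding the reply as UTF-8 is an assumption.

```python
import socket


def build_whois_query(name: str) -> bytes:
    """RFC 3912 query: a single line terminated by CRLF.

    Internationalized domain names should be passed in ASCII (A-label) form.
    """
    return name.encode("ascii") + b"\r\n"


def whois(host: str, name: str, port: int = 43, timeout: float = 10.0) -> str:
    """Send one WHOIS query and return the full response text."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_whois_query(name))
        chunks = []
        # The server signals the end of the response by closing the connection.
        while chunk := sock.recv(4096):
            chunks.append(chunk)
    return b"".join(chunks).decode("utf-8", errors="replace")


if __name__ == "__main__":
    print(whois("whois.registry.example", "example.cz"))
```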

| Package | Provided services | Description | Default config location |
|---|---|---|---|
|  | None (it is only an Apache module) | Apache module that provides common functionality of CORBA communication for the EPP and WHOIS Apache modules |  |
|  | None (it is only an Apache module) | Apache module for parsing EPP commands and transforming them into CORBA calls to the server (and vice versa) |  |

| Docker image | Description | Default config location |
|---|---|---|
|  | HTTP web server | None; configuration example in fred/ferda/-/tree/master/docs/demo-deploy |
|  | Web server gateway interface | None; configuration example in fred/ferda/-/tree/master/docs/demo-deploy |
|  | HTTP web server | None; configuration example in fred/ferda/-/tree/master/docs/demo-deploy |
|  | Web server gateway interface | None; configuration example in fred/ferda/-/tree/master/docs/demo-deploy |

3.5. HM node

Hidden master for the DNS infrastructure.

Provides: zone file generation, zone signing, notification of DNS servers

Network:

access to IXFR (tcp, port 53) permitted only from DNS servers
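The IXFR rule above limits zone transfers at the firewall, and the zone server on the hidden master can enforce the same list. This chapter does not name the DNS software, so the sketch assumes BIND 9 serves the generated zones. The hypothetical Python helper below renders a zone statement in which only the listed DNS servers are notified and allowed to transfer. The zone name, file path, and addresses are placeholders.

```python
def bind_zone_stanza(zone: str, zone_file: str, dns_servers: list[str]) -> str:
    """Render a BIND 9 zone statement for a hidden master.

    notify explicit: send NOTIFY only to the also-notify list, since a hidden
    master is not listed in the zone's NS records.
    allow-transfer: permit AXFR/IXFR only from the same servers.
    """
    acl = " ".join(f"{addr};" for addr in dns_servers)
    return "\n".join([
        f'zone "{zone}" {{',
        "    type master;",
        f'    file "{zone_file}";',
        "    notify explicit;",
        f"    also-notify {{ {acl} }};",
        f"    allow-transfer {{ {acl} }};",
        "};",
    ])


if __name__ == "__main__":
    print(bind_zone_stanza("example", "/var/lib/bind/example.zone",
                           ["192.0.2.53", "198.51.100.53"]))
```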

| Package | Provided services | Description | Default config location |
|---|---|---|---|
|  | None | System binary for zone file generation |  |

3.6. APP node

Provides:

CORBA naming service (omninames) as a virtual server “corba”,

backend application servers,

CLI administration tools.

Tip

In addition to the FRED components, we recommend adding pgbouncer to pool and distribute database connections.
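Here is a minimal sketch of such a pgbouncer setup on the APP node, rendered with Python's configparser. The database names freddb and logdb come from the database section of this chapter, while the host names and the auth file path are hypothetical. pool_mode is left at session, pgbouncer's default, because it is not known here whether the FRED backends work with transaction pooling.

```python
import configparser
import io


def render_pgbouncer_ini(databases: dict[str, str], listen_port: int = 6432) -> str:
    """Render a pgbouncer.ini with a [databases] and a [pgbouncer] section."""
    config = configparser.ConfigParser(interpolation=None)
    config.optionxform = str  # keep option names exactly as written
    # Each entry maps the name clients connect to onto a real database.
    config["databases"] = databases
    config["pgbouncer"] = {
        "listen_addr": "127.0.0.1",  # backends on the APP node connect locally
        "listen_port": str(listen_port),
        "auth_type": "scram-sha-256",
        "auth_file": "/etc/pgbouncer/userlist.txt",
        "pool_mode": "session",
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    print(render_pgbouncer_ini({
        "freddb": "host=db.registry.internal port=5432 dbname=freddb",
        "logdb": "host=logdb.registry.internal port=5432 dbname=logdb",
    }))
```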

Network:

only internal access from the private network of the Registry

| Package | Provided services | Description | Default config location |
|---|---|---|---|
|  |  | FRED backend for accounting |  |
|  |  | Administration interface daemon |  |
|  | None | FRED automatic keyset management client |  |
|  |  | FRED backend for automatic keyset management |  |
|  | None | FRED fileman services interface definition files | None |
|  | None | FRED logger services interface definition files | None |
|  |  | FRED server for database reports (gRPC) | None |
|  |  | FRED service for file management |  |
|  |  | FRED logger services (gRPC) | None |
|  |  | FRED logger services (CORBA) | None |
|  | None | FRED notify implementation |  |
|  |  | FRED backend for public request management | None |
|  |  | FRED registry core services (gRPC) | None |
|  |  | FRED backend service for the DNS zone generator |  |
|  | None | FRED server interface definition files | None |
|  |  | FRED public interface daemon |  |
|  |  | FRED registrar interface daemon |  |
|  | None | CDNSKEY records scanner | None |
|  | None | Data validation and settings management using Python type hinting | None |

3.7. Database nodes

The database is separated into several nodes:

DB – the main database

freddb – data of all domains, contacts, registrars, history, etc.

LOGDB – the audit log (logger) database

logdb – logging of all user transactions

messenger

secretary

FERDA

The logger database is kept separate because of its high workload.

Network:

accessed only by the backend server(s) from the APP node
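On the PostgreSQL side, the same restriction can be expressed in pg_hba.conf. PostgreSQL evaluates that file top to bottom and applies the first matching line. The hypothetical Python helper below emits one line per APP node address and database, followed by catch-all reject lines. The addresses are placeholders, and scram-sha-256 assumes PostgreSQL 10 or newer.

```python
import ipaddress


def pg_hba_lines(app_hosts: list[str], databases: list[str],
                 method: str = "scram-sha-256") -> list[str]:
    """Allow TCP connections to the given databases only from APP node addresses."""
    lines = []
    for db in databases:
        for host in app_hosts:
            # "10.0.1.10" becomes "10.0.1.10/32"; strict=False clears host bits in a range.
            net = ipaddress.ip_network(host, strict=False)
            lines.append(f"host {db} all {net.with_prefixlen} {method}")
    # First match wins, so these reject every other TCP client.
    # Local (Unix socket) connections are not affected.
    lines.append("host all all 0.0.0.0/0 reject")
    lines.append("host all all ::/0 reject")
    return lines


if __name__ == "__main__":
    # The DB node serves freddb; a LOGDB node would be configured with ["logdb"].
    print("\n".join(pg_hba_lines(["10.0.1.10", "10.0.1.11"], ["freddb"])))
```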

| Package | Provided services | Description | Default config location |
|---|---|---|---|
|  | None | Database schema and example data for FRED | None |
|  | None | PostgreSQL database server | None |

3.8. Secretary node

| Package | Provided services | Description | Default config location |
|---|---|---|---|
|  |  | Django app for rendering e-mails and PDFs |  |
|  | None | HTTP web server | None |
|  | None | Web server gateway interface | None |

3.9. Messenger node

| Package | Provided services | Description | Default config location |
|---|---|---|---|
|  | None | FRED fileman services interface definition files | None |
|  | None | FRED messenger services interface definition files | None |
|  |  | FRED service for sending and archiving messages | None |